This vignette describes the motivation for the splinetree package and introduces the algorithms used by the package. For a more practical guide to working with data using the functionalities of the splinetree package, see the vignettes Tree Building with splinetree and Forest Building with splinetree.

Motivation

Longitudinal studies, where an outcome of interest is repeatedly measured in the same subjects over time, play a key role in research across a variety of disciplines, including medicine, epidemiology, and the social sciences. In these studies, the trajectory of a longitudinal outcome over time can look very different in different members of the population. It is often useful to find factors that help explain this variation in growth patterns. We propose using longitudinal regression trees, specifically splinetree, to find population subgroups where group members follow similar longitudinal trajectory patterns and share common baseline covariate values. We also propose using spline forests to understand which baseline covariates can explain the most variation in trajectories.

Regression trees, which recursively partition a population into homogenous subgroups (nodes), are a promising approach to this problem, but many regression tree algorithms cannot be used for studying longitudinal trajectories. Longitudinal regression trees were first proposed by Segal (1992), who suggested minimizing within-node Mahalanobis distance of longitudinal response vectors. This method requires that all individuals in the sample have the same number of equally spaced measurements of the outcome, which is rarely the case in studies involving human subjects. Yu and Lambert (1999) eliminated this need for balanced data by first reducing the dimension of the outcome vector using smoothing splines and then maximizing within-node homogeneity of the smoothed trajectories. The work of Yu and Lambert is the basis for the algorithm employed by splinetree.

Algorithm

The method for building a splinetree model, which is invoked with every call to the function splineTree(), consists of two steps. First, the longitudinal response vector for each individual in the dataset is projected onto a spline basis, yielding a set of coefficients for each individual. Second, the coefficients from the projection are used in constructing a regression tree that maximizes the within-node homogeneity of the projected trajectories.

Spline Projection

Yu and Lambert suggested treating each response vector as a functional curve \[Y_i(t) = f_i(t) + \epsilon_i(t)\] where \(f_i(t) = \sum_{k = 1}^q\beta_{ik} X_k(t)\) for a set of basis functions, \(X_k(t)\) and coefficient vector \(\boldsymbol{\beta}_i = (\beta_{i1},...,\beta_{iq})^T\), and where \(\epsilon_i(t)\) is a white noise process with mean zero and constant variance. The first step of splineTree() transforms the user-provided longitudinal dataset (in long format) into a wide format dataset where each individual is defined by their coefficient vector \(\boldsymbol{\hat{\beta}}_i = (\hat{\beta}_{i1},...,\hat{\beta}_{iq})^T\). In the final regression tree, terminal nodes are labeled with average coefficient vectors \(\boldsymbol{\bar{\beta}} = (\bar{\beta}_{1},...,\bar{\beta}_{q})^T\) over the individuals in the node. These average coefficients, along with the tree-wide basis functions \(X_k(t)\), describe the average trajectory for individuals whose covariate values place them in this node.

The splinetree package uses B-Splines (built using splines::bs()), rather than smoothing splines as originally suggested in Yu and Lambert, for the basis functions \(X_k(t)\) and obtains the coefficients by regressing each individual’s longitudinal response on these common basis functions. Whereas the original dataset may have a irregular number of observations per individual, the transformed dataset is balanced; each individual has the same number of estimated coefficients, all of which correspond to a common set of basis functions.

A consequence of flattening the data in this way is that baseline covariates used for splitting the tree cannot be time-varying. When splineTree() is called it expects a dataset in long format where the baseline covariates it can split on take on the same value in every row corresponding to a certain individual. In order to include information about time-varying covariates in the tree, summary measured can be defined and included as split variables. For example, instead of including time-varying yearly income as a split variable, we could define a new time-constant variable for each individual such as starting income, average income, or average yearly increase in income. Alternatively, if income is a non-linear, one could smooth those variables with a spline basis and use the coefficients as split variables (at the expense of interpretability).

One unique feature of the splineTree() function is that the user can specify whether or not the set of basis functions \(X_k(t)\) includes the intercept function. This allows the user to explore relationships with shape, separate from level. When the intercept is included, the splits of the tree take into account both the shape and the level of the longitudinal response trajectory, and the terminal nodes of the tree describe full trajectories that can be used for prediction. When the intercept is excluded, the splits of the tree take into account only the shape of the trajectories. In this case, the predicted coefficients for terminal nodes describe trajectories that are equal to \(0\) when \(t=0\), which will typically not provide reasonable prediction values. To obtain rough predictions from intercept-less trajectories, the mean starting response for all individuals in the node can be added onto the trajectory after the fact as an estimated intercept.

The set of basis functions \(X_1(t), ..., X_q(t)\) is determined by parameters to the splineTree() function which are passed forward to the bs() function. The degree parameter, with default equal to 3 for a cubic spline, specifies the degree of the polynomials for the spline basis. The boundary knots for the spline basis are set to be the minimum and maximum values that the time variable takes on in the dataset, and no internal knots are included in the spline basis as a default. In order to accomodate more complex trajectory patterns, a vector of internal knot locations can be provided through the knots parameter. Alternatively, internal knots can be added using the df parameter. If provided, the df parameter sets q (the number of basis functions), and so the number of internal knots is set to be df-degree-intercept. In this case, the appropriate number of knots are placed automatically at percentiles of the time variable to break up the data into equally size groups. The parameters knots and df should not be simultaneously provided; one or the other should be used to add internal knots.

The tree-building process seeks to maximize homogeneity of smoothed trajectories within nodes; if the projected trajectories poorly approximate the real data, the tree will not be useful. Therefore, the choice of parameters such as degree and df should be guided by knowledge of the trajectories being modeled. If the trajectories are suspected to be non-monotonic, a linear basis with no knots is a poor choice. Although more complex bases allow the individual projections to more closely model the individual response vectors, overly complex bases should be avoided. If the number of coefficients (\(q\)) exceeds the number of observations for a certain individual, the individual will be assigned NA coefficient values and this individual will be ignored during the tree-building process. To avoid throwing out data, \(q\) should be less than the minimum number of observations per individual. Even an individual with many observations may be assigned NA coefficients if they have no observations to one side of an internal knot. Individuals with only one observation to one side of an internal knot can be assigned extremely large coefficients that affect the tree-building process. For these reasons, before choosing a basis it is important to ensure that individuals in the dataset have enough observations to justify the spine basis, and that these observations are spread out enough to support the placement of internal knots.

Split Criteria

Once the data has been transformed into a matrix of spline coefficients, a regression tree is built. The tree building process employed by splinetree uses the same exhaustive search algortihm as CART, from Brieman et al. (1984), but with a modified measure of node purity. CART is implemented in the popular rpart package (T. Therneau and Atkinson (2018)). The splinetree package is built on top of rpart using the custom split function framework explained in T. M. Therneau (2018), and so a splinetree model is actually an object of class rpart.

As in CART, at each node the splinetree algorithm computes a split score for all possible ways to separate the data based on the split covariates. The score is based entirely off of the smoothed response curves described by the spline coefficients; the actual response data is never seen by the split function. The split score is based on the reduction in the sum of squared errors of the smoothed trajectories in the node around the mean smoothed trajectory in the node. The errors are evaluated at a set of fixed grid points. If \(N\) denotes the data found in the current node, \(L\) denots the data found to the left of the split, and \(R\) denotes the data to the right of the split, the split score is \(SS_N - SS_L - SS_R\). The value \(SS_S\) for a set \(S\) is defined as

\[ SS_S = \sum_{i \in S}(\mathbf{X}\hat{\boldsymbol \beta}_{i} - \mathbf{X}\bar{\boldsymbol{\beta}}_{S} )^T(\mathbf{X}\boldsymbol{\beta}_{i} - \mathbf{X}\bar{\boldsymbol{\beta}}_S )= \sum_{i \in S}(\boldsymbol \beta_{i} - \bar{\boldsymbol{\beta}}_{S} )^T\mathbf{X}^T\mathbf{X}(\boldsymbol{\beta}_{i} \ - \bar{\boldsymbol{\beta}}_{S})\]

where the matrix \(\mathbf{X}\) contains the values of the basis functions \(X_1(t), ..., X_k(t)\) evaluated at the chosen fixed time points on a grid \((t_1,...,t_m)\), the \(\hat{\boldsymbol{\beta}}_i\) are the spline coefficients for individual \(i\), and \(\bar{\boldsymbol{\beta}}_S\) is the mean coefficient vector for all individuals in set \(S\). The grid points can be specified using the parameter nGrid, which specifies the number of grid points to be placed automatically at percentiles of the time variable, or the parameter gridPoints, which provides a vector of specific time points.

At the end of the exhaustive search, the split with the highest split score (meaning that it provides the largest decrease in the projection sum of squared errors) is selected as the split for the current node. The process is then repeated recursively. For computational efficiency, not every possible partition of categories is considered for a categorical variable. The cateogries are first placed into an approximate order based on the magnitude of the average coefficients of data belonging to each category, and then only binary splits along the ordering are considered.

Apart from the custom split score, other behavior of the tree building process mimics that of rpart. For example, missing covariate values are handled with surrogate splits (see the rpart documentaiton for more details). The size of a splinetree can be controlled with the complexity parameter, cp, which is passed forward to rpart. A large splinetree can also be pruned with the rpart::prune() method. Since the rpart tree is built using a flattened version of the original data, the rows of an rpart-created attribute such as tree$where will correspond to the rows of the flattened data, not the original data. For users who are familiar with the rpart package and wish to use features such as tree$where, the flattened dataset that indexes the tree$where vector can be found in tree$parms$flat_data.

Although a splinetree model is an rpart object, not all rpart functions will have the expected behavior when applied to a splinetree model. Numerous splinetree functions have been provided to make the models easier to work with. For example, print.rpart() will not print out the full vector of predicted coefficients for each node of a splinetree model, and so the splinetree::stPrint() method is preferable. Similarly, predict.rpart() will fail to return the full vector of coefficients associated with a node, and so splinetree::predictCoeffs() is preferable.

Example

In this section, we will show how to use splineTree() to build a tree. More details on customizing, visualizing, and evaluating splinetree models can be found in the vignette Tree Building with splinetree.

This example uses data taken from the National Longitudinal Survey of Youth, 1979 (NLSY). The longitudinal trajectory of interest is body mass index (BMI) over time. We randomly sample 1,000 individuals from the NLSY out of those who have non-missing BMI data at at least 10 timepoints spread out over at least 20 years. We are interested in the relationship between BMI trajectories and time-constant variables such as HISP, WHITE, BLACK (indicator variables for subject’s reported race), SEX (indicator for subject’s reported sex), Num_sibs (number of siblings), and HGC_FATHER and HGC_MOTHER (reported highest grade completed by subject’s father and subject’s mother).

Previous research by Clarke et al. (2008) suggests that adult BMIs tend to increase steadily throughout early adulthood and flatten out in later adulthood. This type of trajectory can be modeled with a piecewise linear trajectory, so we will define our spline basis with degree = 1 and one internal knot. Since we do not have a particular location in mind for the internal knot, we use the df parameter rather than the knots parameter. This will place the knot at the median age. If we include an intercept, we will need to let df = 3 to add one internal knot, but if we do not include an intercept df = 2 will suffice. In this example, we build one tree with an intercept and one tree without an intercept so as to compare the two. The default value in the rpart package for the cp parameter, which controls the size of the tree, is 0.01. Here, we set the value of cp slightly lower to create trees that are large enough to be interesting but small enough to view in a plot.

library(splinetree)

## Loading required package: rpart

## Loading required package: nlme

## Loading required package: splines

## Registered S3 method overwritten by 'mosaic':

## method from

## fortify.SpatialPolygonsDataFrame ggplot2split_formula <- ~HISP + WHITE + BLACK + SEX + Num_sibs + HGC_FATHER + HGC_MOTHER

tformula <- BMI ~ AGE

sample_tree <- splineTree(split_formula, tformula, idvar = "ID",

data = nlsySample, degree = 1, df = 2,

intercept = FALSE, cp = 0.005)

sample_tree_intercept <- splineTree(split_formula, tformula, idvar = "ID",

data = nlsySample, degree = 1, df = 3,

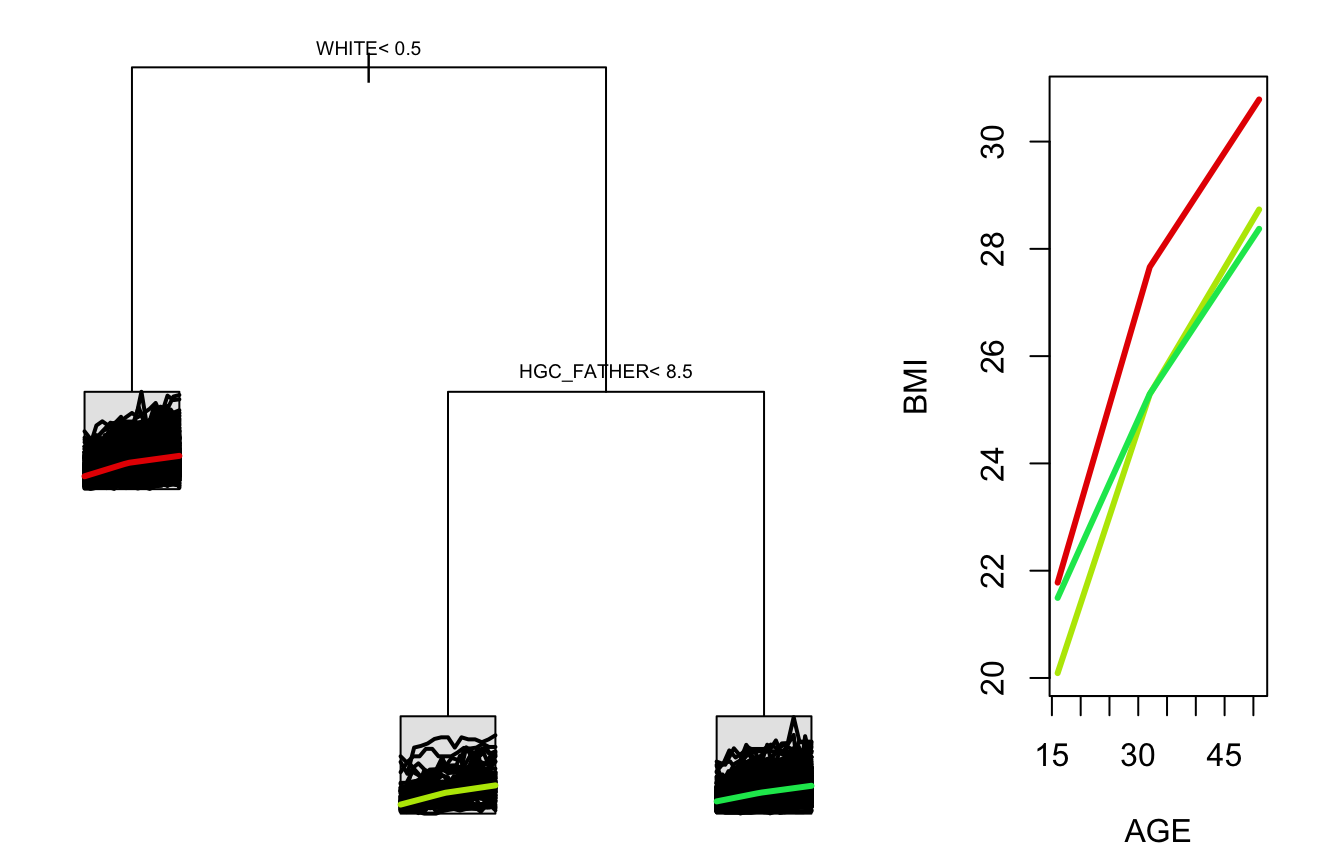

intercept = TRUE, cp = 0.005)After building these two trees, we can view a printed summary or a plot of each. Beginning with the no-intercept tree, we see that non-white individuals are predicted to have more rapid growth in body mass index than white individuals. Among white individuals, those whose fathers did not complete more than 8.5 years of schooling show more rapid BMI growth. Since this tree was built without an intercept, the average starting BMI is added to each average trajectory in the plot.

stPrint(sample_tree)

## n= 1000,

##

## node), split, n , coefficients

## * denotes terminal node

##

## 1) root, 1000, (4.961796, 8.091247)

## 2) WHITE< 0.5, 505, (5.882807, 9.006190)*

## 3) WHITE>=0.5, 495, (4.022179, 7.157821)

## 6) HGC_FATHER< 8.5, 78, (5.198284, 8.642817)*

## 7) HGC_FATHER>=8.5, 417, (3.802188, 6.880053)*

stPlot(sample_tree)

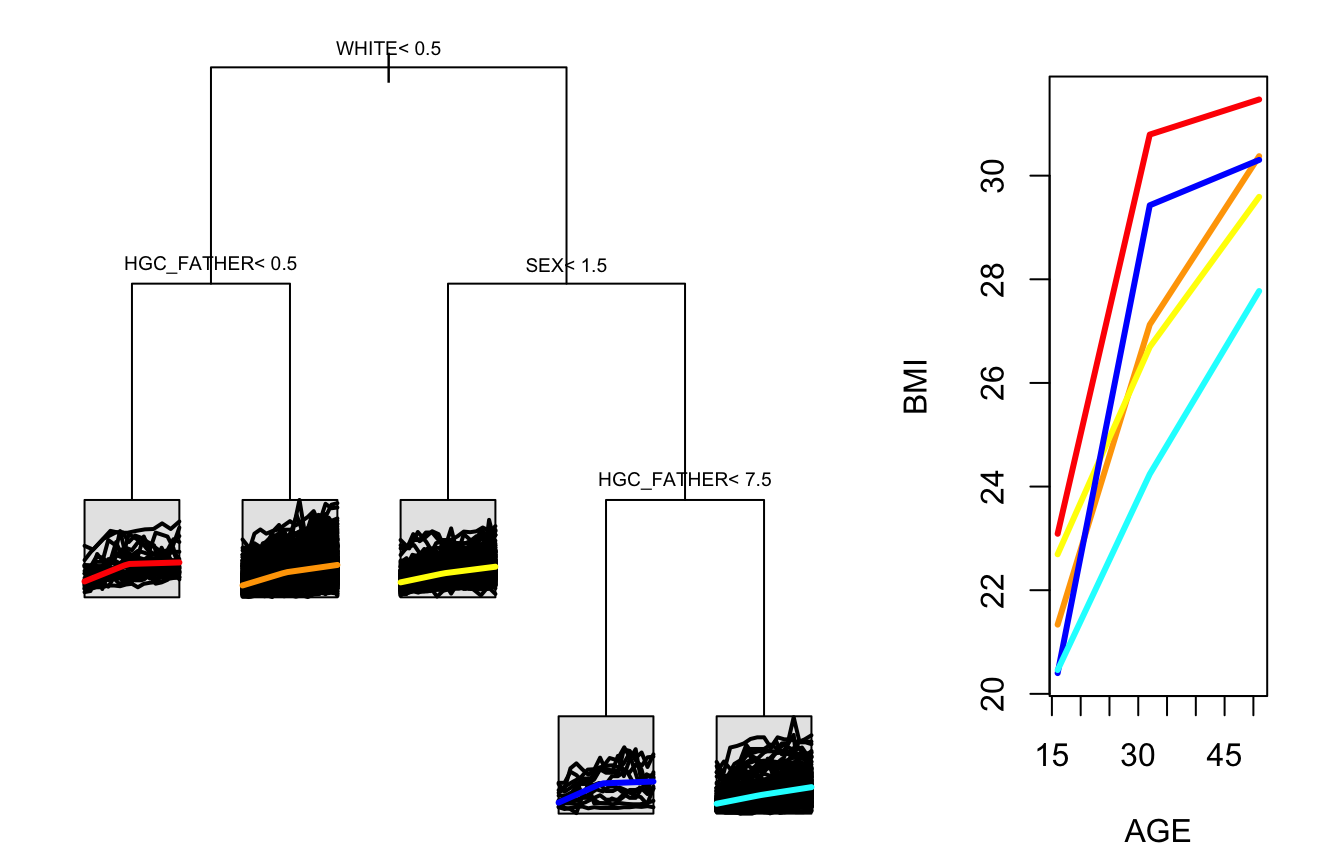

In the tree that is built with an intercept, each node is associated with three coefficients instead of two. While we see two of the same variables that were in the no-intercept tree included here, we now also see sex playing a role. This suggests that sex may impact the level of BMI but not the shape of the trajectory.

stPrint(sample_tree_intercept)

## n= 1000,

##

## node), split, n , coefficients

## * denotes terminal node

##

## 1) root, 1000, (21.52416, 4.961796, 8.091247)

## 2) WHITE< 0.5, 505, (21.42300, 5.882807, 9.006190)

## 4) HGC_FATHER< 0.5, 25, (23.08928, 7.703289, 8.380706)*

## 5) HGC_FATHER>=0.5, 480, (21.33622, 5.787990, 9.038767)*

## 3) WHITE>=0.5, 495, (21.62736, 4.022179, 7.157821)

## 6) SEX< 1.5, 259, (22.69360, 3.999510, 6.896897)*

## 7) SEX>=1.5, 236, (20.45721, 4.047057, 7.444175)

## 14) HGC_FATHER< 7.5, 12, (20.40041, 9.027571, 9.903029)*

## 15) HGC_FATHER>=7.5, 224, (20.46025, 3.780243, 7.312451)*

stPlot(sample_tree_intercept, colors=c("red", "orange", "yellow", "blue", "cyan"))

Forests

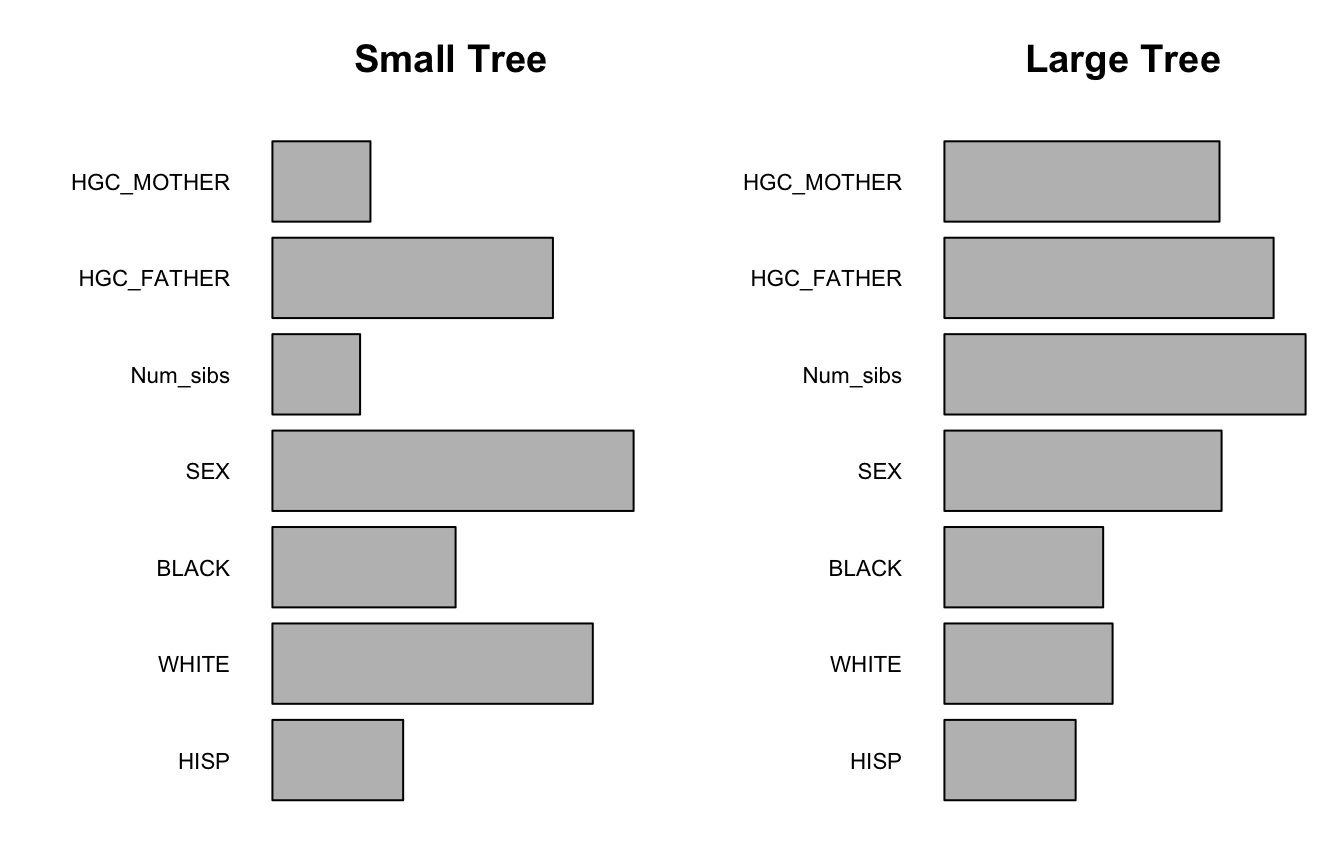

The examples above suggest that the variables WHITE and HGC_FATHER are most associated with the shape of a BMI trajectory, and that these same variables, with the addition of SEX, are associated with the BMI level. However, a single regression tree gives a poor overview of the true importance of covariates. If there is a close tie between the “goodness” of two possible splits at the top level of the tree, then a new tree built to a slightly perturbed dataset could include an entirely different set of covariates. If two covariates that are associated with the trajectories are highly correlated, it is likely that only one will appear in the tree.

The rpart package has a built in variable importance metric based on improvements of goodness of fit provided by both split varaibles and surrogate split variables (see the rpart documentation for more information). While this measure helps capture the importance of second-best or correlated covariates that do not appear in the tree itself, this measure can show bias towards variables with more unique values; a very important binary variable that is used at the top level of a tree is not available for surrogate splits later in the tree, and so its importance according to this metric may be smaller than a less important numeric variable that is repeatedly used as a surrogate split throughout the tree. A consequence of this bias is that the rpart variable importance rankings are highly dependent on the size that a tree is pruned to; in larger trees, variables with many unique values can appear over and over again as primary or surrogate splits, which inflates their importance. This bias is illustrated in the plot below, which displays the rpart variable importance metric for the sample_intercept_tree from above along with an identical tree that is allowed to grow much larger. While SEX is rated to be the most important variable in the small tree, when the tree is allowed to grow larger the importance of all three non-binary variables surpasses the importance of SEX. This is an example of a general trend; as trees grow larger, the rpart importance of variables with many unique values is inflated relative to variables with fewer unique values.

extra_large_tree <- splineTree(split_formula, BMI ~ AGE, "ID", nlsySample, degree = 1, df = 2, intercept = TRUE, cp = 0.0005)

vars = attr(terms(split_formula), "term.labels")

imp_large=extra_large_tree$variable.importance

imp_small=sample_tree_intercept$variable.importance

In the univariate setting, Random Forests and the associated permutation importance measure are popular ways to obtain more stable measures of variable importance (see Breiman (2001) or Liaw and Wiener (2002) for more). Through random data subsampling and random variable selection, Random Forests give variables that are not locally optimal in a single tree a chance to appear in a tree. The splineForest() method allows users to build ensembles of spline trees, combining the projection splitting framework of splineTree() with the random subsampling and random variable selection principles of a Random Forest.

Spline Forest Implementation

Once a user is familiar with the splineTree() function, using the splineForest() function is straightforward; most of the parameters are identical. Although a spline forest is an ensemble of spline trees, a call to splineForest() does not involve repeated calls to splineTree(). The projection process happens only once in a forest, and the random subsampling occurs on the level of the flattened data, not the individual responses. This ensures that full trajectories are kept together in the forest building process. Following the work of Strobl et al. (2007), we perform random subsampling without replacement, and we subsample 63.5% of the data for each tree (this matches the proportion of data that we would expect to be used for each tree if we used the more traditional practice of bootstrap sampling). Strobl et al. (2007) show that sampling without replacement reduces bias in the permutation importance metric when compared to bootstrap sampling. By setting the parameter bootstrap=TRUE, users can choose to use bootstrap sampling instead.

The ntree parameter specifies the number of trees in the ensemble; ntree random samples are drawn from the flattened data and a tree is built to each of these samples. Each tree uses the same split criteria as the splineTree() function but incorporates a random component into the split selection. The prob parameter determines the probability that any one variable is put into contention to be the split variable at a node. An appropriate value for the prob parameter depends on the number of total split variables. If there are only three total split variables and prob = 1/2, then there is a \(1/8\) chance that no variables will be considered for a split and the tree building will terminate prematurely. However, with a large number of split variables, a probability of around \(1/3\) is appropriate. This method for incorporating random variable selection is different than the method implemented in the randomForest package (Liaw and Wiener (2002)), where the number of variables to be considered at each node is specified, not left up to chance. The choice to use a probability arose from the desire to stay within the rpart custom-split framework; the probability version could be easily implemented without modifying the base rpart code.

Example

We build an example spline forest using the same dataset introduced above, using a linear spline basis with one internal knot and an intercept.

forest <- splineForest(split_formula, tformula, idvar = "ID", data = nlsySample, degree = 1, df = 3, intercept = TRUE,ntree = 50, prob = 0.5, cp = 0.005)This model returned by this function is a named list with 15 components. The individual trees in the forest are saved in forest$Trees. Looking at forest$Trees[[1]], we see that, due to the bootsrapping and the randomization, new variables appear that did not appear in a single tree.

stPrint(forest$Trees[[1]])

## n= 1000,

##

## node), split, n , coefficients

## * denotes terminal node

##

## 1) root, 1000, (21.59373, 4.833041, 7.836891)

## 2) WHITE< 0.5, 497, (21.59589, 5.652020, 8.604274)

## 4) HGC_MOTHER< 9.5, 193, (22.26560, 6.334509, 9.166461)

## 8) HISP< 0.5, 72, (22.18153, 7.305519, 10.665140)*

## 9) HISP>=0.5, 121, (22.31563, 5.756718, 8.274684)

## 18) HGC_FATHER< 7.5, 79, (21.94670, 5.453582, 7.584180)*

## 19) HGC_FATHER>=7.5, 42, (23.00957, 6.326903, 9.573488)

## 38) HGC_FATHER< 11.5, 19, (23.68207, 9.432358, 13.554250)*

## 39) HGC_FATHER>=11.5, 23, (22.45403, 3.761527, 6.285032)*

## 5) HGC_MOTHER>=9.5, 304, (21.17070, 5.218730, 8.247359)

## 10) HGC_FATHER< 15.5, 287, (21.12267, 4.994298, 8.061879)

## 20) BLACK< 0.5, 84, (21.38371, 4.070658, 6.517687)

## 40) HGC_MOTHER< 11.5, 33, (23.54127, 4.159233, 4.867263)*

## 41) HGC_MOTHER>=11.5, 51, (19.98765, 4.013345, 7.585609)*

## 21) BLACK>=0.5, 203, (21.01466, 5.376493, 8.700855)

## 42) Num_sibs< 0.5, 12, (21.92762, 9.258605, 15.084090)*

## 43) Num_sibs>=0.5, 191, (20.95730, 5.132591, 8.299814)*

## 11) HGC_FATHER>=15.5, 17, (21.98156, 9.007670, 11.378700)*

## 3) WHITE>=0.5, 503, (21.59159, 4.023831, 7.078662)

## 6) Num_sibs< 1.5, 119, (21.34069, 3.503172, 7.025591)

## 12) HGC_FATHER< 7.5, 9, (21.95224, 7.747427, 13.376170)*

## 13) HGC_FATHER>=7.5, 110, (21.29066, 3.155915, 6.505998)

## 26) SEX< 1.5, 65, (21.92471, 3.813171, 6.449284)*

## 27) SEX>=1.5, 45, (20.37481, 2.206546, 6.587919)*

## 7) Num_sibs>=1.5, 384, (21.66934, 4.185181, 7.095109)

## 14) SEX< 1.5, 198, (22.70250, 4.063532, 6.686761)*

## 15) SEX>=1.5, 186, (20.56953, 4.314678, 7.529801)

## 30) HGC_FATHER< 8.5, 27, (21.22512, 7.198432, 11.651630)

## 60) HGC_MOTHER< 8.5, 12, (23.00307, 8.670758, 16.124130)*

## 61) HGC_MOTHER>=8.5, 15, (19.80277, 6.020571, 8.073624)*

## 31) HGC_FATHER>=8.5, 159, (20.45821, 3.824984, 6.829869)*While each tree within forest$Trees is an rpart object that can be printed with stPrint, these trees are not the same as splinetree model. A single splinetree tree stores information about the spline basis used and the flattened dataset within it. For the sake of eliminating redundancy in already large splineforest models, this information is not stored in every tree in a forest; it is only saved once at the forest level (see, for example, forest$innerKnots and forest$flat_data). Many splinetree functions, including stPlot(), expect this additional information to be stored at the tree level, and therefore stPlot(forest$Trees[[1]]) will result in an error.

To determine which data points were used in the making of the first tree in the forest, we can use forest$index[[1]]. The indices found in this vector refer to rows of forest$flat_data. It is important to know which data points were used in the making of each tree because this can determine the predictions from the forest. In obtaining predicted responses for a datapoint that was in the training sample, we can average the predictions from each individual tree in the dataset, or we can only average predictions across trees in the forest for which this datapoint was “out-of-the-bag,” meaning that it was not in the boostrap sample. “Out-of-the-bag”" (or “oob”) predictions are suggested by Breiman (2001) because they provide a sense of out-of-sample performance of the forest without using a distinct test set. In the splinetree package, we can predict BMIs for each individual in the NLSY sample either using “all” trees for each datapoint, only trees for which this datapoint was “out of the bag,” or only trees for which this datapoint was “in the bag” (or “itb”).

fullpreds <- predictYForest(forest, method = "all")

oobpreds <- predictYForest(forest, method = "oob")

itbpreds <- predictYForest(forest, method = "itb")If we look at these predictions, we see that the “oob” prediction errors are greater, but this lower performance is more representative of how our forest might perform on a new test set.

Variable Importance

Our key motivation in building a spline forest was to figure out which variables are most importantly associated with heterogeneity in BMI trajectory. The splinetree package implements a variable importance metric closely related to that of Breiman (2001) and Liaw and Wiener (2002).

For every tree in the forest, tree performance is measured on out-of-the-bag data points. Then, the values of variable \(V\) are randomly permuted, and tree performance is re-measured. The average difference in performance over all trees in forest becomes the variable importance score for variable \(V\). The splinetree package provides scores using the absolute difference in performance, the percent difference in importance, and the standardized difference in importance (differences divided by their standard deviation). In most cases, these three metrics will rank the variables in the same way, and so the choice is a matter of preference. A variable importance score reported as negative should be interpreted as a variable importance score of \(0\).

Two different definitions of tree “performance” can be used. If the forest’s spline basis includes the intercept, variable importace can be measured with respect to predicting the response outcome. In this case, the tree performance metric used is the Mean Squared Prediction Error (MSE), as in Liaw and Wiener (2002). This version is implemented in the function varImpY().

Alternatively, we can measure tree performance using the projection sum of squared errors. The advantage of this metric is that it can be used whether or not the forest includes an intercept. When used on a non-intercept forest, it measures the importance of each variable in determining the shape of a trajectory. When used on a forest with an intercept, there is an option to ignore the intercept in the variable importance calculations. Therefore, even though the sample forest we have been working with includes an intercept, we can still calculate a measure of shape-based variable importance.

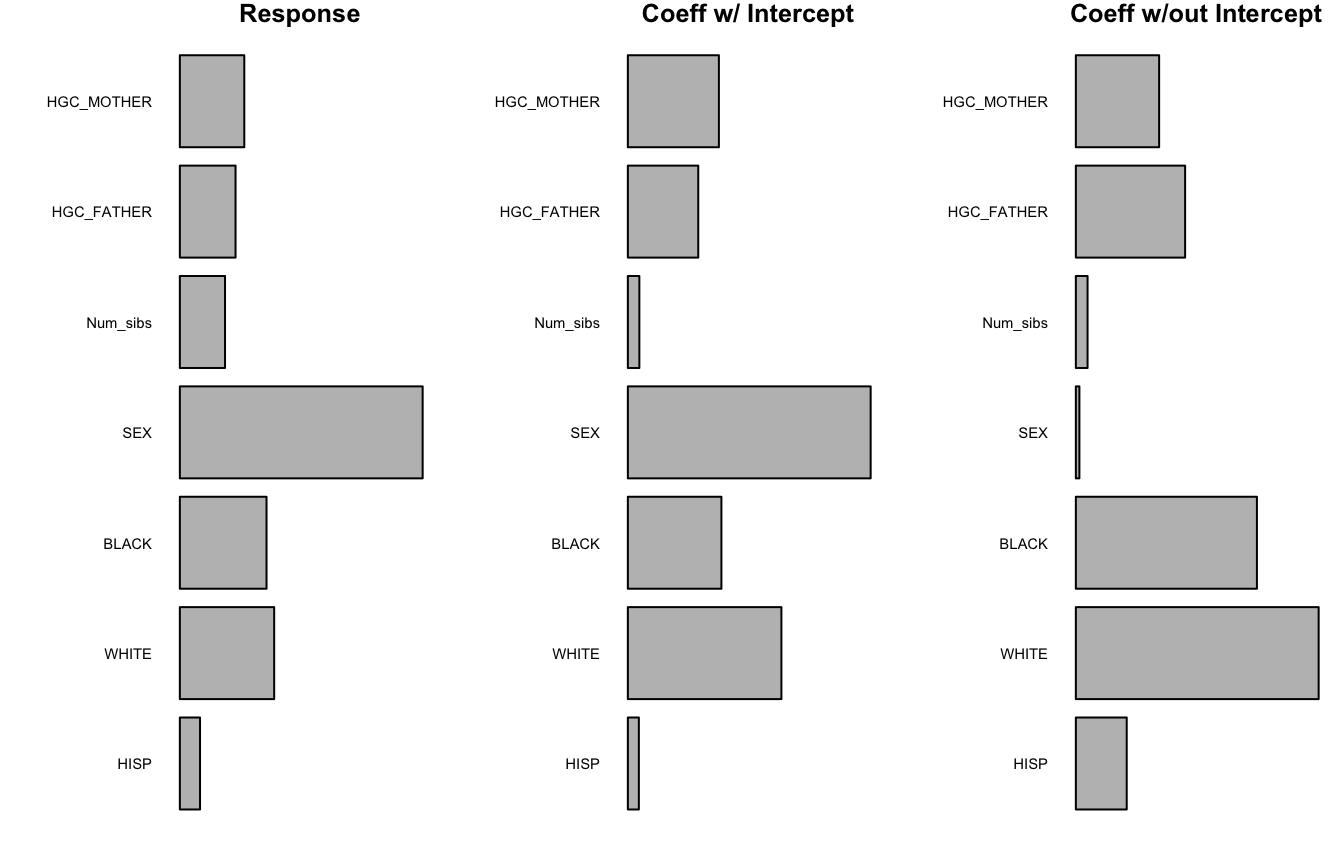

We can create three different variable importance matrices using our sample forest. We can then compare the response-based importance to the projection-based importances (both including and ignoring the intercept). Each of these importance matrix contains three columns, corresponding to absolute differences in performance, percent differences in performance, and standardized differences in importance. We will use the third column.

Y_imps <- varImpY(forest) #on the slow side

coeff_imps <- varImpCoeff(forest, removeIntercept = FALSE)

shape_imps <- varImpCoeff(forest, removeIntercept = TRUE)par(mfrow = c(1,3))

plotImp(Y_imps[,3], main = "Response")

plotImp(coeff_imps[,3], main ="Coeff w/ Intercept")

plotImp(shape_imps[,3], main = "Coeff w/out Intercept")

Using a splineForest, some new variables have relatively high importance. For example, while HGC_MOTHER did not appear in a splineTree, it has a similar overall imporance to HGC_FATHER. These two variables may be unlikely to appear in the same tree due to their correlation (0.667 among complete cases), but the permutation importance metric shows that both variables are associated with the level and the shape of the outcome. The first two panels of the graph are quite similar because they both take into account level and shape; the difference is that the first panel looks at how influential the variables are for approximating BMI values, whereas the second panel looks at how influential the variables are for approximating the smoothed BMI trajectories. The fact that the panels look similar is good because it suggests that the smoothed trajectories used are reasonable approximations of true BMI.

The third panel of the graph shows more differences. When the intercept of the smoothed trajectories is ignored, the SEX variable loses all importance. This mirrors the observation from our splineTree, where SEX appeared in the intercept tree but not the no intercept tree. Now that we are using a forest, we can more confidently assert that reported sex is associated with the level of BMI but not the shape of the trajectory over time.

Conclusion

The splinetree package allows users to build regression trees and random forests for longitudinal trajectories. The spline basis method is flexible enough to accomodate complex trajectory shapes, and the models can be used to study just the shape of the trajectories, or to study shape and level simultaneously. For more details on the functionalities of the package, see the vignettes Tree Building with splinetree and Forest Building with splinetree.

References

Breiman, Leo. 2001. “Random Forests.” Machine Learning 45 (1). Springer: 5–32.

Brieman, L, J Friedman, R Olshen, and C Stone. 1984. “Classification and Regression Trees. Belmont (ca): Wadsworth.” Google Scholar.

Liaw, Andy, and Matthew Wiener. 2002. “Classification and Regression by RandomForest.” R News 2 (3): 18–22.

Segal, Mark Robert. 1992. “Tree-Structured Methods for Longitudinal Data.” Journal of the American Statistical Association 87 (418). Taylor & Francis Group: 407–18.

Strobl, Carolin, Anne-Laure Boulesteix, Achim Zeileis, and Torsten Hothorn. 2007. “Bias in Random Forest Variable Importance Measures: Illustrations, Sources and a Solution.” BMC Bioinformatics 8 (1). BioMed Central: 25.

Therneau, Terry M. 2018. “User Written Splitting Functions for Rpart.” Mayo Clinic URL https://cran.r-project.org/web/packages/rpart/vignettes/usercode.pdf.

Therneau, Terry, and Beth Atkinson. 2018. Rpart: Recursive Partitioning and Regression Trees. https://CRAN.R-project.org/package=rpart.

Yu, Yan, and Diane Lambert. 1999. “Fitting Trees to Functional Data, with an Application to Time-of-Day Patterns.” Journal of Computational and Graphical Statistics 8 (4). Taylor & Francis Group: 749–62.